Certain things must remain human roles

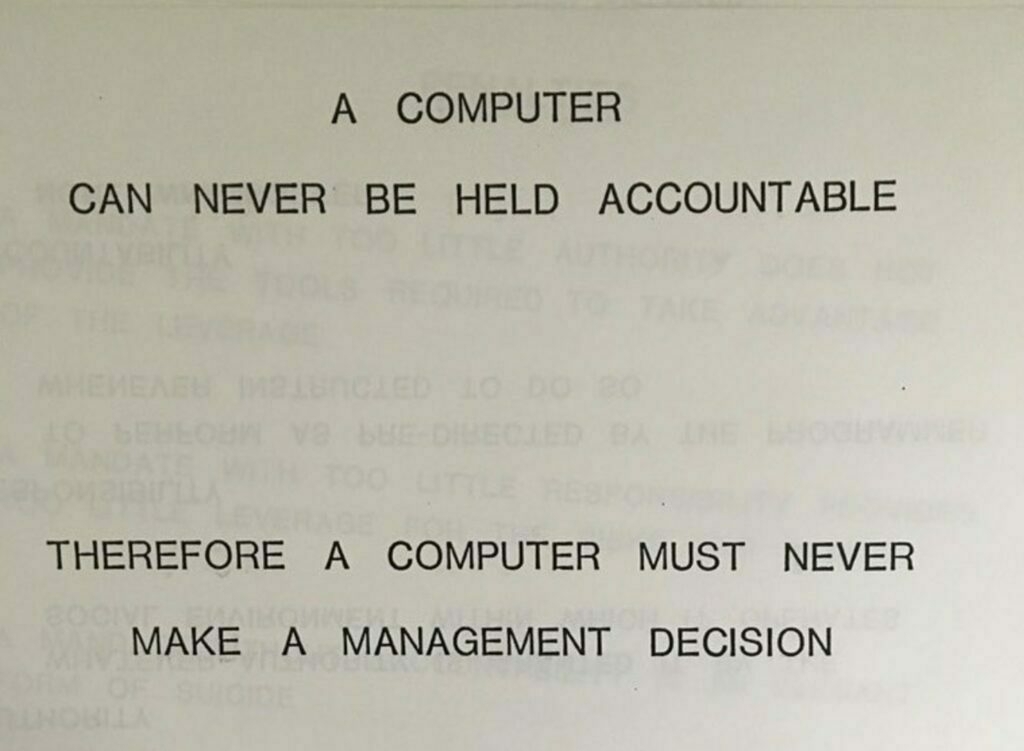

I came across this image while browsing through my RSS feeds this week. It was produced in 1979 by someone at IBM (it's not clear to me whether it came from an internal presentation slide or from training material, not that this matters), and the sentence it carries was presented in the context of future technology, both hypothetical and real.

What caught my attention and made me think about writing a post was the sentence, “A computer can never be held accountable. Therefore a computer must never make a management decision”. To me, despite being more than 40 years old, it is completely relevant in 2023 to all the artificial intelligence hype, especially when everyone in Silicon Valley seems to have elected AI as a kind of panacea for all of humanity's problems.

I’ve been meaning to write about it for some time now, so here it goes: I believe that, despite all this hype around AI, all the talk about how it could outperform humans and take our jobs is nonsense. Geoffrey Hinton, the supposed “godfather of AI”, believes, as Paris Marx recently put it, that AI is now very near the point where it becomes more intelligent than humans, “tricking and manipulating us into doing its bidding”. I’d guess that’s very far from happening, if it happens at all.

For one thing, AI already faces several challenges of its own, among them the environmental impact of the processing power it requires and the social harm its algorithms can cause, for example by denying someone credit or by mistaking humans for monkeys. Current AI still has a long road of improvement ahead of it.

Language models, ChatGPT being the most hyped one, sound very reasonable when providing their answers, but they are nothing but parrots, in the sense that they take in large amounts of raw data (texts from all over the internet, and therefore varying widely in quantity, quality and veracity) and recombine, remix and rewrite them to create a mostly reasonable, human-sounding answer. Don’t get me wrong, this is amazing, as it is very difficult to create. But to me it isn’t enough to support the current hype that calls an LLM “intelligent”. LLMs don’t have the ability to think, let alone reach the level of human intelligence, at least as AI currently stands. They need training (by humans), they need continuous adjusting and refining, and even then they are susceptible to errors, inconsistencies and hallucinations. And as such, again in my opinion, they’re subject to that sentence from 1979: they cannot be held accountable for what they produce or say. They are so far from intelligent that as soon as an error is made, it takes a human to readjust and rewrite the program so that the decisions are corrected from that point on. This means their intelligence will only ever be as good as the limits of the algorithm.

Finally, there are roles that must continue to be performed by humans. Last January I was listening to an episode of the Tech Won’t Save Us podcast where Timnit Gebru, CEO of the Distributed AI Research Institute and former co-lead of the Ethical AI research team at Google, talked, among other things, about neural networks:

“For some people, the brain might be an inspiration, but it doesn’t mean that it works similarly to the brain, so some neuroscientists are like: Why are you coming to our conferences saying this thing is like the brain?”

— Timnit Gebru, on neural networks

Neural networks were inspired by the human brain, by an attempt to emulate the brain waves and electrical pulses that are set in motion when we think and when we make decisions. But they’re not a copy of our brains. Nor do they work exactly like them. Nor can they decide like we decide, no matter how well trained they are. So, there we are again, back in 1979: computers can’t be held accountable.

But imagine for a moment that computers could think and artificial intelligences could make decisions. The idea of complex, opaque, autonomous systems taking care of our financial systems, food supply chains, nuclear power plants and military weapons systems would be horrible. The same goes for our doctors, for example, being replaced by robots. The same goes for our teachers. In all these situations, decisions need to be made by human beings, so the roles must remain with humans. And if that ever changes, in my opinion, it will be the irrevocable sign that humanity has lost itself.

Luckily, these scenarios are (still) the stuff of dystopian books.